Exploring the Enigmatic 'Null': A Deep Dive into Its Multifaceted Role Across Computing and Concepts

The term "null" frequently arises across diverse fields, from computer science to philosophy, often signifying an absence, an unknown, or an uninitialized state. While seemingly straightforward, its specific interpretation and behavior vary significantly depending on the context, making a precise understanding of "null" crucial for clarity and accuracy in many domains.

The Fundamental Nature of Null

At its core, null represents the absence of a meaningful value. It is distinct from zero, which is a specific numerical value; an empty string, which is a string containing no characters; or a boolean "false," which is a definitive logical state. Instead, null communicates that there is no value present or that a reference points to nothing. This fundamental distinction is critical in preventing logical errors and ensuring data integrity.
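The distinction can be made concrete with a small Python sketch, where `None` plays the role of null. The `describe` helper and the `candidates` dictionary are illustrative names, not part of any library.

```python
# Four values that are often conflated; only one of them means "no value".
candidates = {"zero": 0, "empty string": "", "false": False, "none": None}

def describe(value):
    """Report whether a value is absent (None) or merely falsy."""
    if value is None:          # identity test: true only for None itself
        return "absent"
    if not value:              # 0, "" and False are falsy but still values
        return "present but falsy"
    return "present"

results = {name: describe(value) for name, value in candidates.items()}
print(results)
```

Testing with `is None` rather than relying on truthiness keeps zero, the empty string, and `False` from being mistaken for a missing value.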

Null in Programming Languages: A Cornerstone of Computation

In the realm of computer programming, null plays a pivotal, albeit sometimes problematic, role. Its implementation and implications can differ considerably across languages.

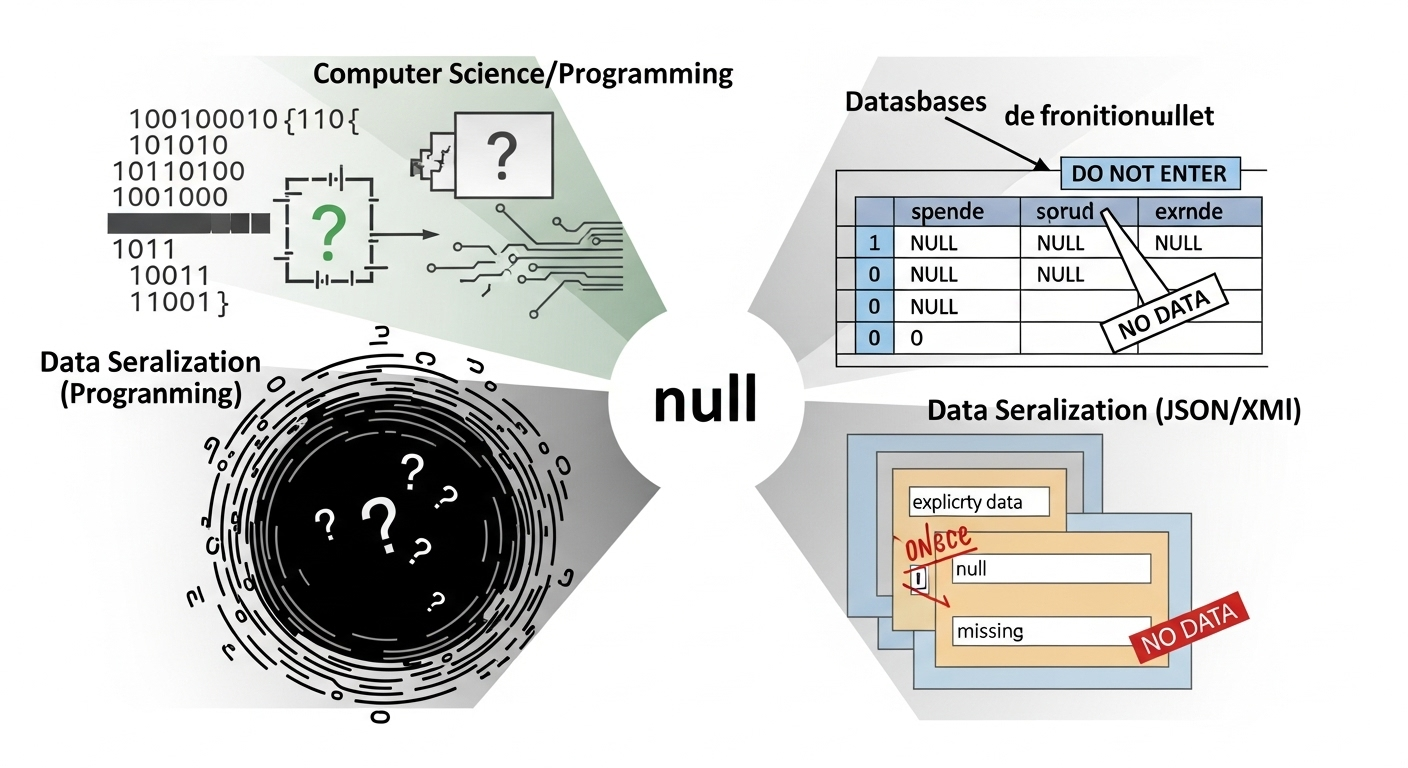

Pointers and References: The Ancestry of Null

In languages like C and C++, `NULL` (or, in modern C++, `nullptr`) is primarily associated with pointers. A null pointer does not refer to any valid object or memory address. It is commonly used to signal that a pointer has not yet been given a target, or that a function failed to allocate memory or locate an object.

- C/C++: The `NULL` macro traditionally expands to an integer constant zero (in C, often `((void *)0)`), but its semantic meaning is explicitly that of a null pointer. The introduction of `nullptr` in C++11 provided a type-safe alternative, so that null pointer constants are handled more rigorously by the compiler. Dereferencing a null pointer is undefined behavior; in practice it typically causes a segmentation fault or an access violation, a critical runtime error.

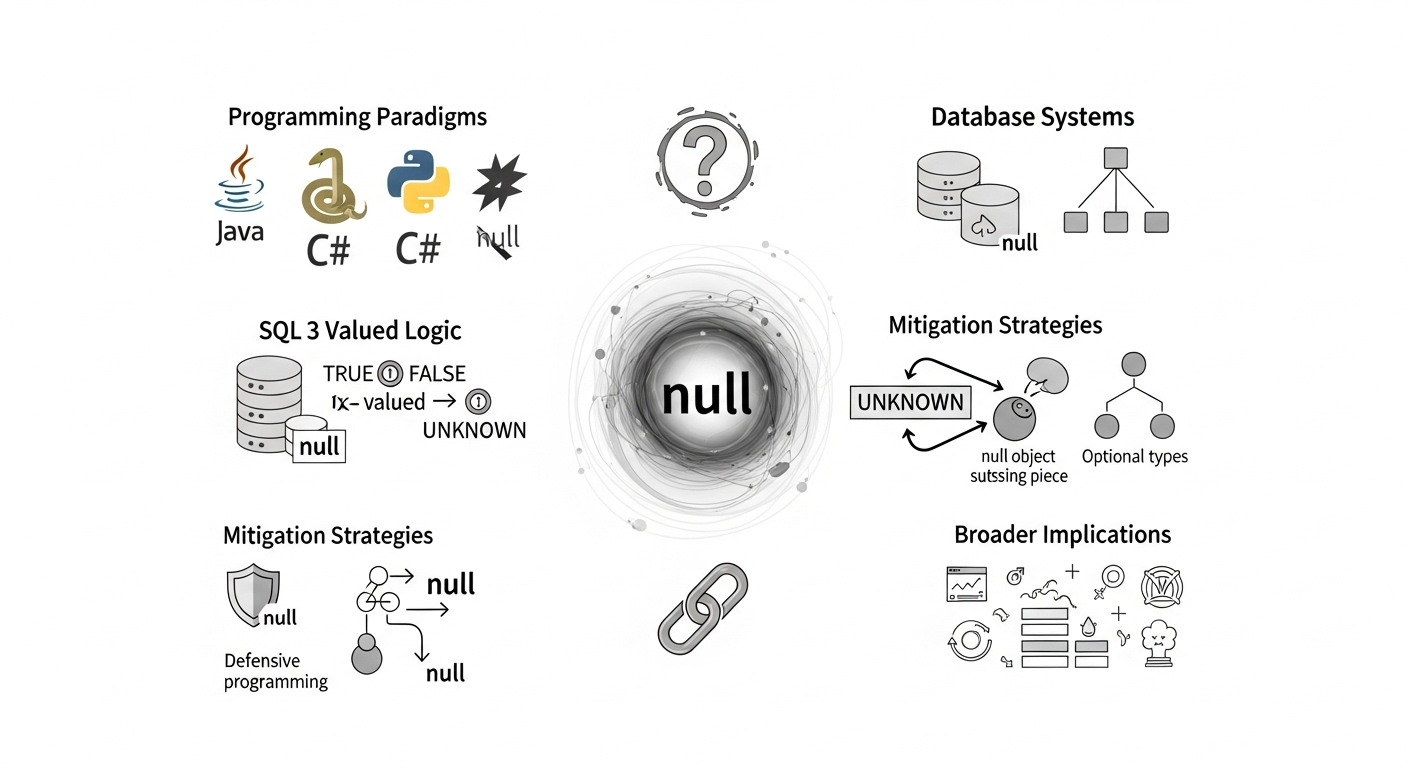

Similarly, in object-oriented languages such as Java and C#, null is used for object references. A null reference means that the reference variable does not currently point to any object in memory. This is distinct from an object that exists but has no data, such as an empty list or an object with all its fields set to default values. Operations performed on a null reference, such as calling a method or accessing a field, typically raise a runtime error: a `NullPointerException` (NPE) in Java, or a `NullReferenceException` in C#.
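The difference between an empty object and a null reference can be sketched in Python, where the closest analogue of an NPE is an `AttributeError` raised on `None`. The variable names are illustrative only.

```python
empty_list = []    # an object that exists but holds no elements
no_list = None     # no object at all

# The empty list still supports every list operation.
empty_length = len(empty_list)

# Calling a method on None fails at runtime, much like an NPE in Java.
try:
    no_list.append(1)
    error_name = None
except AttributeError as exc:
    error_name = type(exc).__name__

print(empty_length, error_name)
```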

Language-Specific Nuances of Null

Different programming paradigms and languages offer varied approaches to handling the concept of null:

- Java: Uses the keyword `null`. While pervasive, Java has also introduced the `Optional` class as a way to explicitly convey the possible absence of a value, encouraging developers to handle the null case without relying solely on traditional null checks.

- C#: Employs the keyword `null`. Like Java, it faces challenges with null reference exceptions. C# 8.0 introduced nullable reference types (enabled by a compiler setting) to help track potential null values and reduce runtime errors, alongside existing nullable value types (e.g., `int?` or `Nullable<T>`).

- Python: Uses `None` to represent the absence of a value. `None` is a singleton object, meaning there is only one instance of `None`. It behaves similarly to null in other languages, indicating no value or no object reference.

- JavaScript: Features two distinct "empty" values: `null` and `undefined`. `undefined` typically means a variable has been declared but not assigned a value, or a function argument was not provided. `null`, conversely, often signifies an intentional absence of any object value. The distinction can be subtle and lead to different behaviors in type coercion and comparisons.

- Ruby: Uses `nil`, which is an instance of `NilClass`. Like Python's `None`, `nil` represents nothingness.

- Swift/Objective-C: Use `nil`. Swift emphasizes safety with optional types (e.g., `String?`), which explicitly indicate that a variable might or might not contain a value, compelling developers to safely unwrap them before use, thereby mitigating the risk of unexpected null-related crashes.
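Of the languages listed, Python's behavior is easy to check directly. The sketch below shows that `None` is a singleton and is conventionally compared by identity. The `first_word` function is a hypothetical helper written for this illustration.

```python
none_type = type(None)      # the class of None, named NoneType
another = none_type()       # calling it returns the one existing None

is_same_object = another is None
type_name = none_type.__name__

def first_word(text):
    """Return the first word of text, or None when text has no words."""
    words = text.split()
    return words[0] if words else None

missing = first_word("   ")
found = first_word("hello world")
print(is_same_object, type_name, missing, found)
```

Because only one `None` object exists, `value is None` is the idiomatic test. It cannot be affected by a class that overrides equality.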

Challenges and Mitigations for Null in Programming

The presence of null, while useful for representing an uninitialized or absent state, introduces complexities. Tony Hoare, who introduced the null reference, later called it his "Billion Dollar Mistake," a phrase that highlights the significant cost of the runtime errors it can cause.

To mitigate issues related to null, various strategies are employed:

- Defensive Programming: Explicitly checking for null before dereferencing a pointer or reference is a common practice, for example by guarding the access with a condition such as `if (myObject != null)`.

- Optional Types: Languages like Java (`Optional`), C# (nullable reference types), and Swift (optionals) provide mechanisms to encapsulate a value that may or may not be present, pushing developers to handle both scenarios explicitly. Where the compiler enforces these types, much of the burden shifts from runtime checks to compile-time checking.

- Design by Contract: Specifying pre-conditions and post-conditions for methods, including whether parameters can be null and whether return values might be null, can clarify expectations.

- Static Analysis Tools: Static analyzers inspect code for potential null dereferences before it is executed.
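Several of these strategies can be combined in a few lines. The following Python sketch uses `Optional` type hints to document which values may be absent, a defensive `is None` check, and a docstring contract promising a non-null result. The function names `parse_port` and `port_or_default`, and the default of 8080, are assumptions made for the example.

```python
from typing import Optional

def parse_port(raw: Optional[str]) -> Optional[int]:
    """Return the port as an int, or None when raw is missing or not a number."""
    if raw is None:                 # defensive check before using the value
        return None
    try:
        return int(raw)
    except ValueError:              # e.g. "abc" or an empty string
        return None

def port_or_default(raw: Optional[str], default: int = 8080) -> int:
    """Contract: never returns None; falls back to default instead."""
    port = parse_port(raw)
    return default if port is None else port

print(port_or_default("443"), port_or_default(None), port_or_default("abc"))
```

With annotations like these, a static type checker such as mypy can flag code that uses the result of `parse_port` as an `int` without first ruling out `None`.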

Null in Databases: Representing the Unknown

In relational databases, particularly those adhering to SQL standards, NULL holds a specific and crucial meaning: it represents a missing or unknown value within a column. It is not equivalent to an empty string, a zero, or a false boolean. Its behavior significantly impacts query results and data integrity.

SQL's Unique Interpretation of Null

- Comparisons: A common misconception is that `column = NULL` will identify rows where the column is null. In SQL, however, any comparison involving NULL (e.g., `= NULL`, `<> NULL`, `> NULL`) evaluates to unknown, not true or false, and a `WHERE` clause keeps only rows for which the condition is true. To check for NULL values, one must use the specific predicates `IS NULL` or `IS NOT NULL`.

- Aggregate Functions: Most aggregate functions (e.g., `SUM()`, `AVG()`, `COUNT(column)`) ignore NULL values by default; `COUNT(*)` is the exception, since it counts rows rather than values. For instance, `AVG(column)` computes the average of only the non-NULL values in the column, effectively excluding rows where the value is unknown from the calculation.

- Joins: Because NULL is not equal to anything, including another NULL, a NULL in a join column does not satisfy an equality join condition. Outer joins keep such rows but leave them unmatched; pairing NULL with NULL requires an explicit `IS NULL` condition.

- Constraints: The `NOT NULL` constraint ensures that a column must always contain a value and cannot be NULL. Primary key columns are implicitly `NOT NULL` and `UNIQUE`. The `UNIQUE` constraint, however, often allows multiple NULL values (as NULL is not considered equal to itself, hence distinct), though behavior varies across database systems.
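These rules can be observed with Python's built-in `sqlite3` module. This is a minimal sketch against an in-memory SQLite database; the table and column names are invented for the example, and the `UNIQUE` result in particular reflects SQLite's behavior, which other systems may not share.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (player TEXT, score INTEGER)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?)",
    [("ann", 10), ("bob", None), ("cy", 20)],  # Python None is stored as NULL
)

def scalar(sql):
    """Run a query and return the first column of its first row."""
    return conn.execute(sql).fetchone()[0]

# Comparisons: "= NULL" is never true, so it matches no rows.
eq_null = scalar("SELECT COUNT(*) FROM scores WHERE score = NULL")
is_null = scalar("SELECT COUNT(*) FROM scores WHERE score IS NULL")

# Aggregates: COUNT(*) counts rows; COUNT(score) and AVG(score) skip NULLs.
count_rows = scalar("SELECT COUNT(*) FROM scores")
count_scores = scalar("SELECT COUNT(score) FROM scores")
average = scalar("SELECT AVG(score) FROM scores")

# Joins: a NULL key does not match another NULL key under "=".
conn.execute("CREATE TABLE bonuses (score INTEGER, bonus TEXT)")
conn.executemany(
    "INSERT INTO bonuses VALUES (?, ?)", [(10, "small"), (None, "mystery")]
)
joined = scalar(
    "SELECT COUNT(*) FROM scores s JOIN bonuses b ON s.score = b.score"
)

# Constraints: SQLite accepts several NULLs in a UNIQUE column.
conn.execute("CREATE TABLE emails (addr TEXT UNIQUE)")
conn.executemany("INSERT INTO emails VALUES (?)", [(None,), (None,)])
null_emails = scalar("SELECT COUNT(*) FROM emails")

print(eq_null, is_null, count_rows, count_scores, average, joined, null_emails)
```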

Implications for Data Integrity and Querying

The specific handling of NULL in databases has profound implications for data modeling, query design, and data analysis. Misunderstanding NULL can lead to incorrect query results, missing data in reports, or unexpected behavior in applications interacting with the database. Careful consideration of whether a column should allow NULL values (i.e., whether the information can truly be unknown or irrelevant) is a fundamental aspect of good database schema design.
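One way rows go missing from a report is a filter that silently drops NULLs. The sketch below, again using an in-memory SQLite database with made-up table and column names, compares a naive filter with a NULL-aware one and uses `COALESCE` to label unknown values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "east"), (2, "west"), (3, None)],   # order 3 has an unknown region
)

def ids(sql):
    """Return the first column of every row produced by the query."""
    return [row[0] for row in conn.execute(sql)]

# "Everything outside east" silently loses the order whose region is NULL.
naive = ids("SELECT id FROM orders WHERE region <> 'east' ORDER BY id")

# Handling NULL explicitly keeps that order in the result.
null_aware = ids(
    "SELECT id FROM orders "
    "WHERE region <> 'east' OR region IS NULL ORDER BY id"
)

# COALESCE substitutes a label so the unknown value is visible in a report.
labelled = conn.execute(
    "SELECT id, COALESCE(region, 'unknown') FROM orders ORDER BY id"
).fetchall()

print(naive, null_aware, labelled)
```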

Null in Data Serialization: JSON and XML

When data is exchanged between systems, the representation of absent values is also critical. Data serialization formats have defined ways to express null.

- JSON (JavaScript Object Notation): JSON explicitly includes a `null` literal. This means a property can have a value of `null`, clearly indicating that the property is present but its value is empty or unknown, rather than the property being entirely absent. For example: `{"name": "Alice", "age": null}`.

- XML (Extensible Markup Language): In XML, the absence of a value can be indicated by simply omitting an element or attribute. However, the XML Schema specification provides a mechanism for explicitly stating that an element is null using the `xsi:nil="true"` attribute, where `xsi` refers to the XML Schema instance namespace. This allows for an explicit representation of "nil-ability."
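Both representations can be read with Python's standard library. The sketch below parses the JSON example above and a comparable XML fragment, and shows how an explicit null differs from a missing field. The `person` document and the `email` field are assumptions introduced for the illustration.

```python
import json
import xml.etree.ElementTree as ET

# JSON: null becomes None, and the key is still present in the dict.
record = json.loads('{"name": "Alice", "age": null}')
age_is_null = "age" in record and record["age"] is None
email_absent = "email" not in record
round_trip = json.dumps({"age": None})

# XML: xsi:nil marks an element as explicitly null.
XSI = "http://www.w3.org/2001/XMLSchema-instance"
doc = ET.fromstring(
    f'<person xmlns:xsi="{XSI}">'
    '<name>Alice</name><age xsi:nil="true"/>'
    "</person>"
)
age = doc.find("age")
# ElementTree stores namespaced attributes under "{namespace-uri}localname".
age_is_nil = age.get(f"{{{XSI}}}nil") == "true"
email_missing = doc.find("email") is None

print(age_is_null, email_absent, round_trip, age_is_nil, email_missing)
```

Note that `record.get("age")` and `record.get("email")` both return `None`, so a membership test such as `"age" in record` is needed to tell an explicit null from an absent property.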

Conceptual and Philosophical Dimensions of Null

Beyond its technical definitions, the concept of null touches upon philosophical ideas of nothingness, absence, and non-existence. While often confused with zero in mathematics, null's role is distinct.

- Nothingness vs. Zero: Zero is a quantitative value on a scale, representing a quantity of nothing. Null, however, often signifies the absence of any value or quantity whatsoever, or a state of being unquantifiable or undefined in a given context. Division by zero offers a loose mathematical analogy: the operation is undefined, meaning it has no value at all rather than a value of zero.

- The Void: Philosophically, null can be seen as analogous to the void or a state of non-being, which has been contemplated across cultures and philosophical traditions for millennia. It raises questions about existence and non-existence.

Understanding these broader conceptual underpinnings can provide a richer perspective on why null behaves the way it does in technical systems and why its explicit handling is so important.

Conclusion

The concept of null, while deceptively simple, is a fundamental element in modern computing and data management. Its presence signifies an absence of value, an unknown state, or an uninitialized reference. Across programming languages, database systems, and data serialization formats, null is handled with specific rules and conventions that, if misunderstood, can lead to significant errors and inconsistencies. A comprehensive grasp of how null operates in different contexts, coupled with the adoption of best practices for its management, is essential for developing robust, reliable, and maintainable software systems and ensuring the integrity of data.